Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

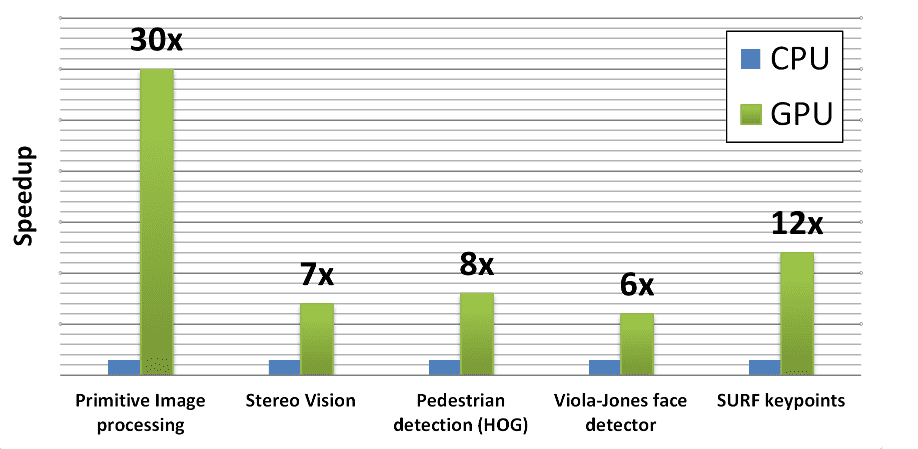

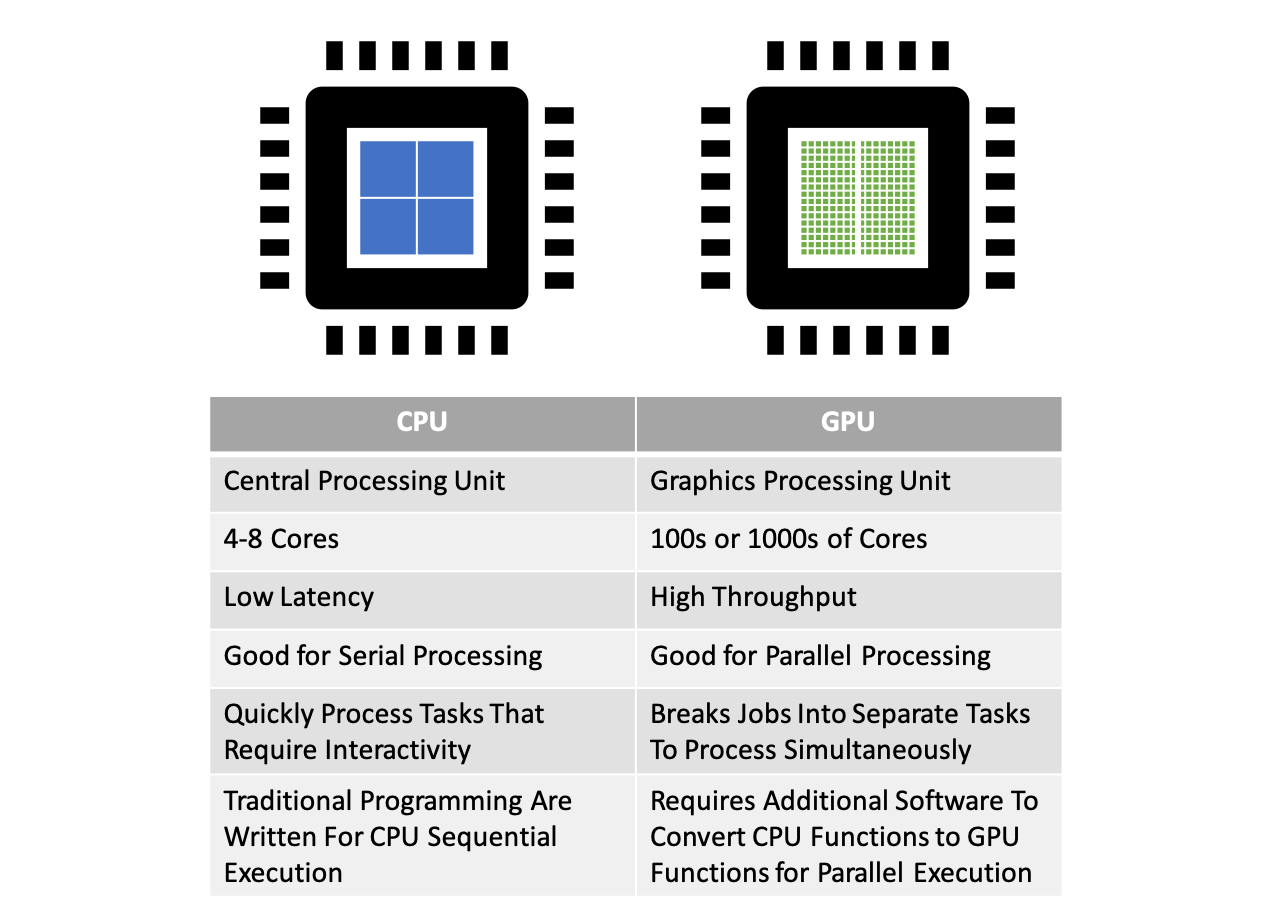

Parallel Computing — Upgrade Your Data Science with GPU Computing | by Kevin C Lee | Towards Data Science

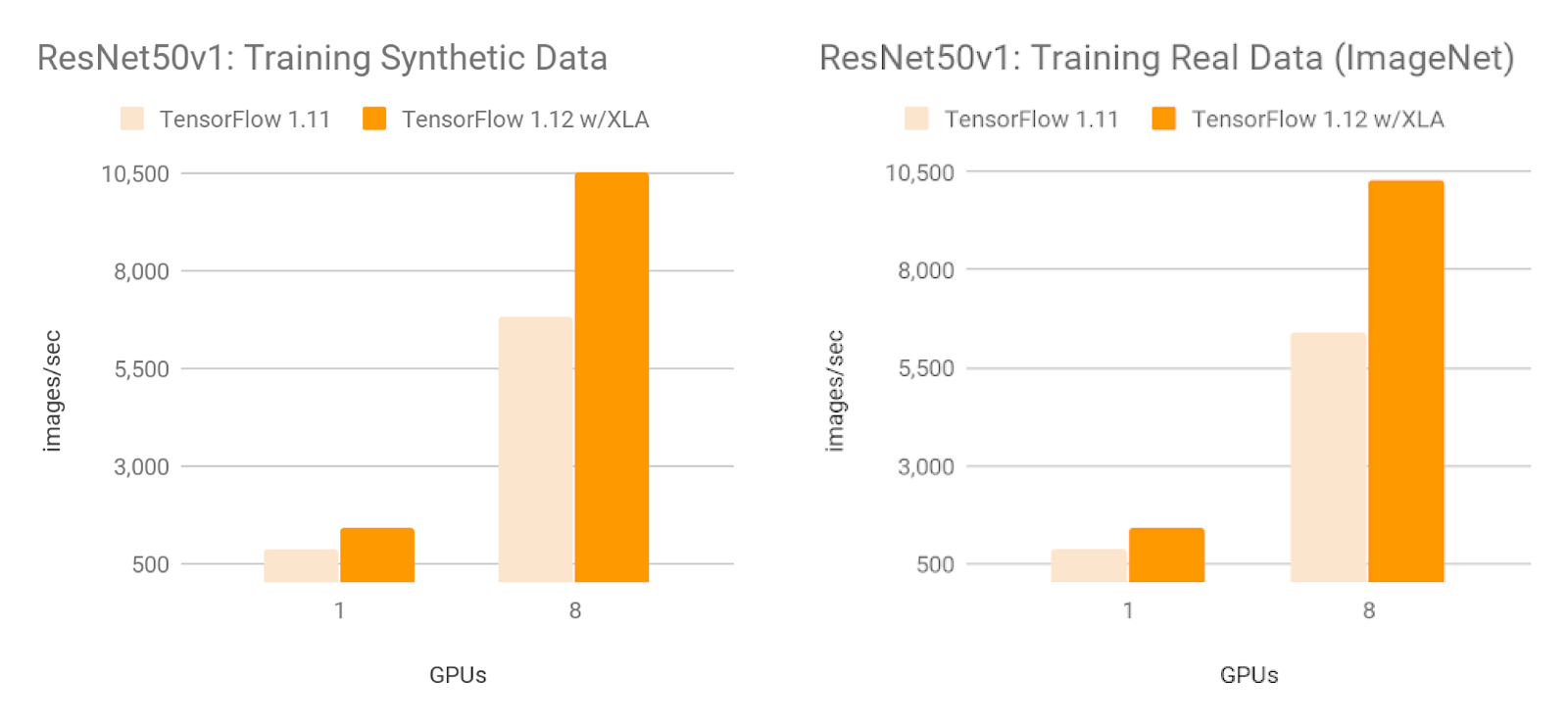

Accelerate computer vision training using GPU preprocessing with NVIDIA DALI on Amazon SageMaker | AWS Machine Learning Blog

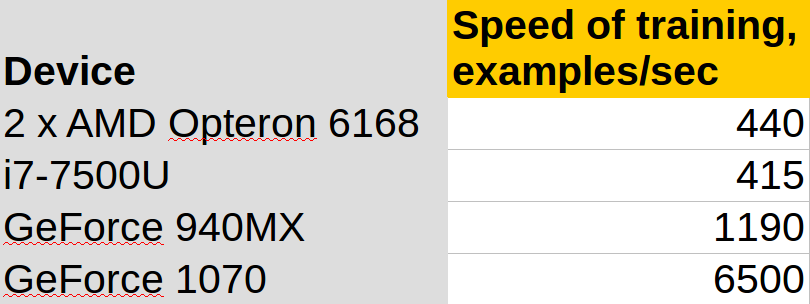

Analyzing CPU vs. GPU performance for AWS Machine Learning with Cloud Academy Hands-on Lab - YouTube

3.1. Comparison of CPU/GPU time required to achieve SS by Python and... | Download Scientific Diagram